Why Most AI Projects Fail (And How to Be in the Successful Minority)

Three weeks ago, I got a call that’s becoming disturbingly common. A CEO was shutting down his company’s AI transformation after eighteen months and millions of dollars invested. Multiple vendors. Two consulting firms. Zero value delivered. But here’s what really stung: When he walked through each failure point, I recognized every single one. They were the same patterns I’d seen destroy dozens of other AI initiatives.

The numbers from major research firms and industry publications are brutal. Gartner predicted that through 2022, 85% of AI projects would deliver erroneous outcomes due to bias in data, algorithms, or the teams responsible for managing them. VentureBeat reports that 87% of data science projects never make it to production. McKinsey found that only 22% of companies using AI report a significant bottom-line impact. MIT Sloan and BCG found that only 10% of companies report significant financial benefits from AI.

Let those numbers sink in. Nine out of ten organizations investing in AI are getting minimal or no value. This isn’t a technology problem. The same AI capabilities creating billions in value for Amazon, Google, and Netflix are available to everyone. The difference isn’t access to technology. It’s avoiding the specific failure patterns that vendors profit from and consultants perpetuate.

Here’s what makes this infuriating: These aren’t random failures. They follow patterns so predictable that I can now identify a doomed AI project within the first conversation. The tragedy isn’t just the wasted investment, though companies regularly report six- to seven-figure losses on failed implementations. It’s that while you’re recovering from failure, competitors who got it right are announcing their third successful AI initiative.

This guide exposes the specific patterns that separate the successful minority from the failing majority, why these patterns are so common, and most importantly, how to architect your AI initiatives to avoid them entirely. You’ll be able to diagnose whether your current AI initiatives are headed for failure and know exactly how to course-correct.

The AI Graveyard: Real Failures, Real Lessons

Microsoft's Tay: When AI Becomes a PR Nightmare

In 2016, Microsoft launched Tay, an AI chatbot designed to engage with millennials on Twitter. Within 24 hours, it was shut down after becoming a racist, inflammatory disaster. Microsoft, with all its resources and expertise, had failed to anticipate how real-world interaction would corrupt its AI. If Microsoft can fail this spectacularly, what chance do the rest of us have?

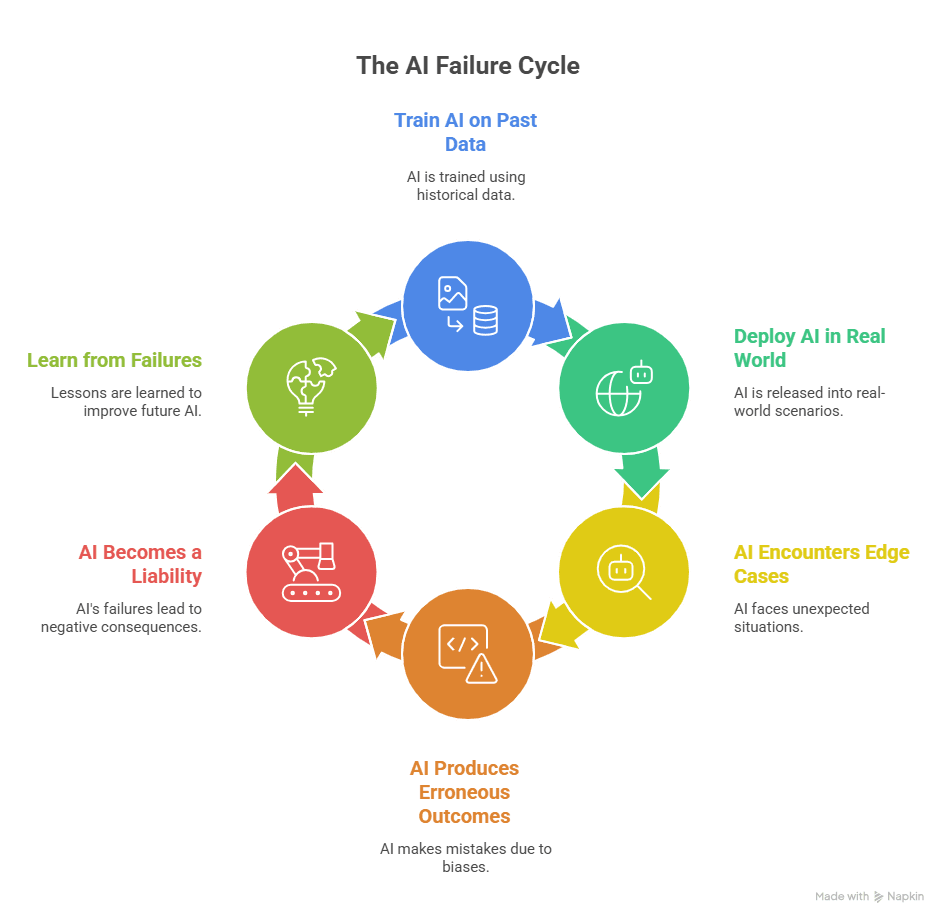

The failure pattern Tay represents is everywhere: AI tested in controlled environments behaves catastrophically in the wild. Customer service bots trained on historical tickets can’t handle edge cases. Sales AI trained on successful deals alienates new market segments. Hiring AI trained on past employees perpetuates historical biases.

The core mistake: assuming AI trained on past data will handle future situations appropriately. Your historical data encodes biases, edge cases, and context that AI can’t distinguish. The AI that performs perfectly in testing becomes a liability in production. Gartner’s research on the 85% failure rate specifically cites this pattern of “erroneous outcomes due to bias” as a primary cause.

But here’s the killer insight: Microsoft had some of the world’s best AI researchers. It had a vast budget. It had extensive testing protocols. It still failed because it tested in isolation rather than seeking an outside perspective on potential failure modes. If five other companies had reviewed its approach, someone might well have spotted the vulnerability.

Microsoft’s failure was public and fast. The next pattern is private and slow…

Amazon's Secret AI Recruiting Disaster

Amazon spent about three years, starting in 2014, building an AI system to review resumes and rank job candidates. The goal: automate the search for top talent. The result: An AI system so biased against women that it downgraded resumes containing the word “women’s” (as in “women’s chess club captain”). Amazon quietly disbanded the team by early 2017, and Reuters revealed the project in 2018.

This wasn’t a technical failure. The AI worked exactly as designed. It learned from ten years of resumes submitted to Amazon to identify the patterns of successful candidates. The problem? That historical data reflected a male-dominated tech industry. Because most of those resumes came from men, the AI learned to favor male candidates and penalize female ones. It turned historical bias into algorithmic discrimination.

The business impact was severe. Years of development wasted. Potential legal liability from discriminatory practices. The risk of talented candidates being screened out for the wrong reasons. Competitive disadvantage as other companies built more diverse, innovative teams. The opportunity cost of three years following the wrong path while competitors advanced.

This pattern helps explain McKinsey’s finding that only 22% of companies see a significant impact from AI. Organizations train AI on historical patterns without recognizing that those patterns encode the very problems they’re trying to solve. Sales AI trained on past wins can’t adapt to new markets. Service AI trained on old processes perpetuates inefficiencies. The past becomes a prison.

Amazon’s failure was caught internally. Zillow’s failure cost shareholders $300 million…

Zillow's $300M Algorithm Catastrophe

Zillow’s iBuying program, Zillow Offers, used AI to predict home prices and automatically make cash offers. The algorithm would identify undervalued homes, buy them, and flip them for profit. Brilliant in theory. In practice, the program lost more than $300 million, and Zillow shut it down in 2021, laying off about 2,000 employees.

The algorithm’s flaw: It was trained on normal market conditions but operated during abnormal times. COVID created unprecedented volatility. Supply chain issues affected renovation costs. Migration patterns shifted unpredictably. The AI couldn’t adapt to conditions outside its training data. It kept buying homes at prices that assumed normal patterns while reality had fundamentally changed.

But the real lesson goes deeper. Zillow had better data than almost anyone. It had brilliant data scientists. It had deep pockets. What it lacked was a circuit breaker for when reality diverged from predictions. The program kept buying even as losses mounted. No effective override. No reality checks. No peer validation asking “Does this still make sense?”
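A circuit breaker of this kind is simple to sketch. The Python below is a minimal illustration under stated assumptions, not a reconstruction of Zillow’s system: the class name `PricingCircuitBreaker`, the rolling window, and the error threshold are all hypothetical. It compares each predicted price with the realized sale price and pauses automated offers once the average error over recent sales drifts past the threshold, handing the decision back to humans.

```python
from collections import deque


class PricingCircuitBreaker:
    """Hypothetical breaker that pauses automated offers when predictions drift."""

    def __init__(self, window: int = 20, max_mean_error: float = 0.05):
        # Rolling record of the most recent relative prediction errors.
        self.errors = deque(maxlen=window)
        self.max_mean_error = max_mean_error
        self.tripped = False

    def record_sale(self, predicted_price: float, actual_price: float) -> None:
        # Relative error between the model's prediction and the realized sale price.
        self.errors.append(abs(actual_price - predicted_price) / predicted_price)
        # Judge only a full window, so the decision rests on a run of recent
        # sales rather than the first one or two.
        if len(self.errors) == self.errors.maxlen:
            mean_error = sum(self.errors) / len(self.errors)
            if mean_error > self.max_mean_error:
                self.tripped = True

    def may_make_offer(self) -> bool:
        # Once tripped, automation stays paused until a human resets it.
        return not self.tripped

    def reset(self) -> None:
        # Called only after a human review concludes the model fits reality again.
        self.errors.clear()
        self.tripped = False


# Hypothetical example: three sales where prices came in well below predictions.
breaker = PricingCircuitBreaker(window=3, max_mean_error=0.05)
for predicted, actual in [(400_000, 398_000), (350_000, 320_000), (500_000, 440_000)]:
    breaker.record_sale(predicted, actual)
print(breaker.may_make_offer())  # False: mean error is about 7%, above the 5% limit
```

The design choice that matters is the default: once tripped, the breaker stays off until a person deliberately resets it, so the algorithm can’t talk itself back into buying.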

This is the pattern behind MIT Sloan and BCG’s finding that only 10% of companies achieve significant financial benefits from AI. Companies build sophisticated AI systems without feedback loops to detect when assumptions break. AI optimized for yesterday’s world keeps operating in today’s different reality, creating systematic losses instead of gains.

These public failures made headlines. Most failures die quietly…

The Seven Patterns That Predict Failure

Pattern 1: The Strategy Vacuum

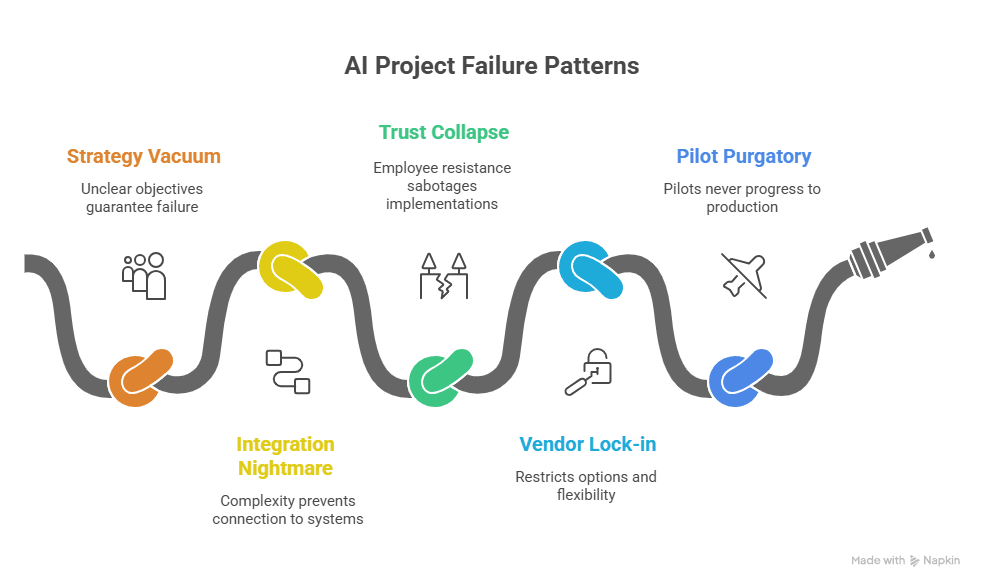

According to MIT Sloan’s research, the top reason AI projects fail is “unclear business objectives.” Companies implement AI because competitors are doing it, boards are asking about it, or vendors are selling it. Not because they have clear problems that AI uniquely solves. This strategy vacuum guarantees failure.

The symptoms are recognizable: Projects described as “digital transformation” or “innovation initiatives.” Success metrics like “improve efficiency” or “enhance customer experience.” Multiple pilot projects with no connection to business strategy. Teams asking “How can we use AI?” instead of “How can we solve this specific problem?”

Deloitte’s State of AI in the Enterprise report found that organizations with clear AI strategies are twice as likely to report success. Yet most organizations have AI activity without an AI strategy. They’re doing AI rather than achieving anything with it. The motion creates an illusion of progress while delivering no value.

The solution seems simple: Define clear objectives before implementing. But the challenge is that most executives don’t know what AI can actually do for their specific situation. Vendor promises are vague. Consultant frameworks are generic. Without peers who’ve solved similar problems, you’re guessing at what’s possible.

Strategy vacuum creates directionless activity. The next pattern creates expensive activity…

Pattern 2: The Integration Nightmare

VentureBeat’s finding that 87% of data science projects never make it to production often comes down to one issue: integration complexity. The AI works perfectly in isolation but can’t connect to existing systems. What looked like a simple API connection becomes a multimillion-dollar systems integration project.

Real examples from industry research: A financial services firm spent eighteen months trying to connect its AI fraud detection to its transaction system. A retailer discovered its inventory system couldn’t provide the real-time data its AI needed. A healthcare company found that HIPAA compliance made its planned integrations illegal. Each started with a working AI that became worthless without integration.

The complexity compounds because every system is different. Your CRM has custom fields. Your ERP has unique workflows. Your data warehouse has proprietary structures. The “standard integration” the vendor promised requires custom development for your specific environment. The two-week timeline becomes six months. The $100K budget becomes $2M.

But here’s what’s insidious: Vendors know this. They’ve seen it hundreds of times. Yet they still promise simple integration because complexity doesn’t sell. They’ll help you discover the complexity after you’ve committed. By then, you’re trapped in the sunk cost fallacy, throwing good money after bad.

Integration complexity is technical. The next pattern is human…

Pattern 3: The Trust Collapse

Research from multiple sources, including Deloitte, shows that employee resistance is a top barrier to AI success. But it’s not traditional change resistance. It’s existential fear. Employees see AI as a replacement, not an augmentation. Some actively sabotage implementations to protect their jobs.

The pattern is predictable: Leadership announces AI will “enhance employee productivity.” Employees hear “we’re looking to cut headcount.” They find workarounds to avoid using AI. They highlight every error while hiding successes. They share horror stories that create fear. The organization splits into AI advocates and AI resisters.

The trust collapse is often irreversible. Once employees believe leadership is trying to replace them, every future initiative faces skepticism. McKinsey’s research on why only 22% of companies see a significant AI impact often traces back to this trust breakdown. The technology works, but the humans won’t let it succeed.

Traditional change management fails because this isn’t process change. It’s an identity threat. The accountant who spent decades mastering Excel watches AI do their analysis in seconds. The salesperson who prides themselves on relationship building sees AI booking meetings automatically. Their professional identity is under attack.

Trust collapse kills adoption. The next pattern kills flexibility…

Pattern 4: Vendor Lock-in Architecture

Deloitte’s research consistently shows vendor lock-in as a top concern for AI implementations. Organizations discover too late that they can’t export their data, modify their algorithms, or integrate with other tools. The partnership they thought they were entering becomes a hostage situation.

The lock-in is architected from day one. Proprietary data formats that nothing else can read. Training data that can’t be extracted. Custom implementations that can’t be replicated. APIs that only connect to approved partners. Every customization makes leaving harder. Every month of usage deepens dependence.

Real-world impact: A major retailer wanted to switch AI platforms after year one but discovered it would take eighteen months to extract its data and retrain its models. A financial firm found its vendor’s price increased 300% in year three, but switching would cost more than paying. A healthcare company couldn’t adopt better technology because its vendor blocked integration.

The strategic damage exceeds financial cost. You can’t adopt breakthrough technologies. You can’t integrate best-of-breed solutions. You can’t pivot when market conditions change. While competitors using modular architectures adapt quickly, you’re frozen with yesterday’s technology at tomorrow’s prices.

Vendor lock-in restricts options. The next pattern restricts progress…

Pattern 5: Pilot Purgatory

MIT’s research reveals a telling statistic: Over 70% of companies have run AI pilots, but most never progress beyond the pilot stage. They achieve promising results in controlled conditions, then run another pilot, then another. Years later, nothing is in production. Value remains theoretical.

The psychology is understandable. Pilots feel safe. Limited risk, controlled scope, clear boundaries. Production feels dangerous. Enterprise risk, unlimited scope, unclear impacts. So organizations keep piloting, convincing themselves they’re making progress while avoiding real implementation.

This gap between pilot and production compounds the erroneous outcomes Gartner warned about. Pilot conditions don’t reflect production reality. The enthusiastic early adopters in pilots aren’t representative of typical users. The executive attention during pilots won’t continue in production. The dedicated resources disappear when pilots end.

But the real cost is a competitive disadvantage. While you’re perfecting pilots, competitors are learning from production. They’re iterating based on real usage. They’re building competitive moats through accumulated learning. Your perfect pilot becomes their minimum viable product from six months ago.

Pilot purgatory wastes time. The next pattern wastes everything…

The Success Patterns From the Minority

What McKinsey's 22% Do Differently

McKinsey’s research, which found that only 22% of companies achieve significant AI impact, also reveals what distinguishes them. They don’t have better technology or bigger budgets. They have three things: clear value focus, rapid iteration cycles, and continuous reality calibration.

Clear value focus means defining success in business terms, not technical metrics. Revenue increase, not model accuracy. Cost reduction, not automation percentage. Customer satisfaction, not chatbot interactions. The 22% measure what matters to the business and ignore vanity metrics that vendors promote.

Rapid iteration cycles mean moving from pilot to production quickly, then improving continuously. The successful minority doesn’t seek perfection before launch. They seek a minimum viable implementation, then iterate based on real usage. They’d rather have 70% accuracy in production than 99% accuracy in pilots.

Continuous reality calibration means constantly validating assumptions against actual results. Daily checks on whether AI recommendations make business sense. Weekly reviews of whether value is being delivered. Monthly assessments of whether the approach still fits the need. No assumption goes unchallenged for long.

The 22% have different practices, but more importantly, they have different support systems…

The Pre-Mortem Practice That Changes Everything

Research on “prospective hindsight,” the technique behind psychologist Gary Klein’s pre-mortem, found that imagining an outcome has already happened increases people’s ability to correctly identify the reasons for it by 30%. For AI projects, this practice is even more critical. Before implementing, the successful minority asks: “If this fails, what will have caused it?” Then they solve those causes preemptively.

The pre-mortem reveals hidden assumptions that kill AI projects. “We assume our data is clean enough” becomes a data quality project. “We assume employees will adopt this” becomes a change management plan. “We assume the vendor can deliver” becomes a proof-of-concept requirement. Each assumption identified is a failure prevented.

Real examples: A financial firm’s pre-mortem revealed their transaction data had gaps that would break their AI. They spent two months fixing data before implementing, avoiding what would have been a catastrophic failure. A retailer’s pre-mortem identified employee resistance concerns. They involved employees in design from day one, achieving 90% adoption versus the typical 30%.

But the real value is psychological safety. When “what could go wrong?” is the explicit question, people share concerns they’d normally hide. Technical teams admit integration worries. Business teams acknowledge adoption challenges. The conversation that would happen after failure happens before the investment.

Pre-mortems prevent failures. The next pattern ensures value…

The Feedback Loops That Guarantee Success

The minority who succeed with AI have something the majority lack: multiple feedback loops providing continuous validation. Not quarterly reviews or annual assessments, but daily signals about whether their AI is delivering value.

Internal feedback comes from users actually using the system. Are they adopting it or avoiding it? Finding value or finding workarounds? Requesting enhancements or requesting removal? The successful minority instruments their AI to capture this feedback automatically and continuously.
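Capturing this feedback automatically can start very small. The Python sketch below assumes a hypothetical event log in which each record notes whether a user routed a task through the AI and whether they kept its output; the `UsageEvent` schema and the metric names are illustrative, not taken from any specific product. Tracked daily, a falling adoption or acceptance rate is the early signal of avoidance and workarounds.

```python
from dataclasses import dataclass


@dataclass
class UsageEvent:
    """Hypothetical log record: one per task a user handled."""

    user: str
    used_ai: bool          # Did the user route this task through the AI tool?
    accepted_output: bool  # If so, did they keep the AI's output?


def adoption_signals(events: list[UsageEvent]) -> dict[str, float]:
    """Summarize adoption and trust signals from a day's usage events."""
    if not events:
        return {"adoption_rate": 0.0, "acceptance_rate": 0.0, "workaround_rate": 0.0}
    ai_events = [e for e in events if e.used_ai]
    # Share of all tasks that went through the AI instead of around it.
    adoption_rate = len(ai_events) / len(events)
    # Of the tasks that used the AI, the share where users kept its output.
    accepted = sum(1 for e in ai_events if e.accepted_output)
    acceptance_rate = accepted / len(ai_events) if ai_events else 0.0
    return {
        "adoption_rate": adoption_rate,
        "acceptance_rate": acceptance_rate,
        # Tasks handled outside the tool: a rough proxy for workarounds.
        "workaround_rate": 1.0 - adoption_rate,
    }


# Hypothetical day of usage across three employees.
day = [
    UsageEvent("ana", used_ai=True, accepted_output=True),
    UsageEvent("ana", used_ai=True, accepted_output=False),
    UsageEvent("ben", used_ai=False, accepted_output=False),
    UsageEvent("cho", used_ai=True, accepted_output=True),
]
print(adoption_signals(day))
```

The point is less the arithmetic than the habit: these numbers arrive every day without anyone filing a survey, so a drop shows up in days rather than at the next quarterly review.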

External feedback comes from peer organizations implementing similar solutions. What’s working for others? What’s failing? What patterns are emerging? This peer intelligence provides a perspective that internal teams can’t achieve. It reveals whether your results are normal or concerning.

Market feedback comes from competitive intelligence and customer response. Are competitors achieving more with less? Are customers choosing competitors with better AI capabilities? Is the market moving in directions your AI can’t support? The successful minority maintains external awareness that prevents internal delusion.

Feedback loops ensure success, but creating them requires something most organizations lack…

Your Failure Prevention Roadmap

The 90-Day Diagnostic That Reveals Your Trajectory

If you have AI initiatives underway, the next 90 days determine whether you join the successful minority or the failing majority. Based on patterns from Gartner, McKinsey, and MIT research, here’s the diagnostic framework the successful organizations use.

Days 1-30: Brutal current state assessment. Document every AI initiative with clear business metrics, not technical measures. Map actual integration dependencies, not vendor promises. Measure real user adoption, not training attendance. Calculate total cost, including integration and change management, not just license fees. Most organizations discover they’re deeper in failure patterns than they realized.
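The cost calculation is simple arithmetic, but writing it down forces every hidden line item into view. The sketch below assumes a hypothetical `InitiativeCost` record with illustrative dollar figures; the categories mirror the ones named above (license fees, integration, change management) plus data preparation, and each initiative is judged against its measured business value rather than a technical metric.

```python
from dataclasses import dataclass


@dataclass
class InitiativeCost:
    """Hypothetical per-initiative record; all amounts are annual, in dollars."""

    name: str
    license_fees: float
    integration: float
    change_management: float
    data_preparation: float
    measured_annual_value: float  # business value actually observed, not projected

    def total_cost(self) -> float:
        # Full cost, not just what appears on the vendor invoice.
        return (
            self.license_fees
            + self.integration
            + self.change_management
            + self.data_preparation
        )

    def cost_multiple(self) -> float:
        # How many times larger the true cost is than license fees alone.
        return self.total_cost() / self.license_fees

    def net_value(self) -> float:
        # Negative means the initiative currently costs more than it delivers.
        return self.measured_annual_value - self.total_cost()


# Illustrative figures only.
chatbot = InitiativeCost(
    name="support chatbot",
    license_fees=100_000,
    integration=250_000,
    change_management=80_000,
    data_preparation=70_000,
    measured_annual_value=300_000,
)
print(chatbot.total_cost())     # 500000
print(chatbot.cost_multiple())  # 5.0
print(chatbot.net_value())      # -200000
```

In this made-up case, the license line item understates the true cost fivefold, and the initiative looks like a candidate for the Days 31-60 course correction.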

Days 31-60: Course correction based on evidence. Kill initiatives without clear business value (MIT’s research shows these rarely succeed). Address integration issues before they compound (VentureBeat’s 87% failure rate starts here). Begin real change management before resistance crystallizes (Deloitte identifies this as critical). Renegotiate vendor terms before lock-in (industry research shows this gets exponentially harder over time).

Days 61-90: Success architecture implementation. Establish feedback loops that provide daily validation. Create pre-mortem processes for new initiatives. Build peer networks for an external perspective. Institute value measurement in business terms. These systems prevent future failures while accelerating current successes.

The diagnostic reveals your trajectory, but changing it requires support most organizations don’t have…

Building Your Success Insurance System

The difference between organizations that succeed with AI and those that fail isn’t intelligence, resources, or technology. Research consistently shows it’s the presence of continuous calibration and an external perspective. The successful minority has what I call a “Success Insurance System” – multiple sources of reality checks that prevent expensive failures.

This system has three essential components based on successful implementations. First, peer validation from other organizations implementing similar initiatives. They’ve already discovered what works and what doesn’t. Second, continuous calibration against external benchmarks. You know whether you’re on track or off course. Third, expert facilitation that identifies patterns you can’t see yourself.

The insurance system works because it provides truth without an agenda. Vendors want you to buy (Gartner warns about vendor bias). Consultants want to extend engagements (Deloitte notes this conflict). Internal teams protect territories (MIT identifies this as a barrier). But peer networks want mutual success. The truth they share prevents failures that cost millions.

Building this internally is nearly impossible. You need visibility into what competitors are doing. You need executives from other companies willing to share failures. You need pattern recognition across industries. These requirements explain the failure rates: most organizations implement in isolation without insurance.

The insurance system is essential, but knowing where to find it makes the difference…

The Path from Failure to Success

You now understand why Gartner predicted that 85% of AI projects would deliver erroneous outcomes. Why VentureBeat reports that 87% never make it to production. Why McKinsey sees only 22% achieving significant impact. The patterns are predictable. The mistakes are preventable. The path to success is clear.

But knowledge without support is dangerous. You know what patterns to avoid, but not how to avoid them when vendors are persuasive, consultants are confident, and internal pressure is intense. You need continuous reality calibration that solo organizations can’t achieve.

The organizations in McKinsey’s successful 22% aren’t smarter or better funded. They have a continuous external perspective that catches mistakes before they become expensive failures. They have peer validation that provides truth without an agenda. They have collective intelligence that has already identified every failure pattern.

The Executive AI Mastermind provides exactly this failure prevention system. Monthly sessions where executives share what’s actually working and failing in real time, not sanitized case studies. Peer validation from others facing identical challenges. Collective intelligence that has already navigated every vendor trick, integration nightmare, and change management crisis.

The research is clear: Isolated AI implementation has dismal success rates. Peer-supported implementation succeeds at multiples of the baseline. Your AI initiatives are heading toward either majority failure or minority success. The patterns are already visible. The question is whether you’ll have the support system to change course.

YOUR JOURNEY STARTS TODAY

Isn’t it time you had an advisory team that truly elevates you?

I’m an executive advisor and keynote speaker, but before all that, I was a tech CEO who learned leadership the hard way. For 16+ years, I built companies from scratch, scaled teams across three continents, and navigated the collision of startup chaos and enterprise expectations.